AI Chain System Design

Promptmanship: process, concepts, activities, and patterns

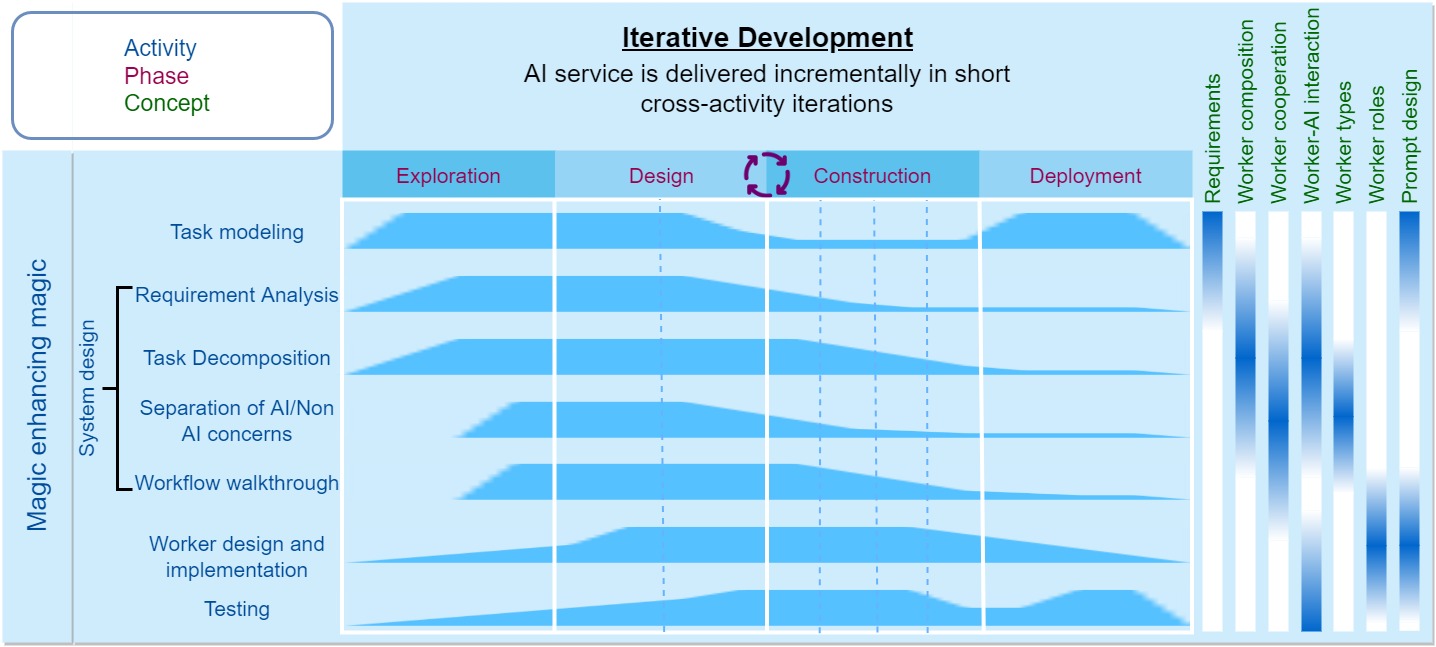

AI chain system design starts in the exploration phase and fully unfolds in the design phase. The design and construction phases are highly iterative: the system design is repeatedly refined based on the actual running results of the AI chain.

This activity is both a continuation and a starting point. It is a continuation because, through requirement analysis, it clarifies and refines the initial (vague) "what we want" and the approximate task model into specific AI chain requirements. It is a starting point because, through task decomposition, separation of AI and non-AI concerns, and workflow walk-through, it produces the AI chain skeleton that serves as the foundation for implementing the AI chain. These three activities adopt the basic principles of computational thinking and the modular design of software engineering. Together they determine worker composition and work-AI interaction modes, worker types (Software 3.0/2.0/1.0), and worker cooperation (see the definitions of these concepts in AI Chain Concepts), which form the AI chain skeleton.

Requirement Analysis

The initial AI chain requirements provided by AI chain engineers are often incomplete or ambiguous. Building the AI chain on such requirements makes it difficult to "build the right AI chain". In software projects, requirements are usually clarified and refined through communication between product managers and users. We achieve this through an LLM-powered requirement co-pilot (implemented in the IDE's Design view). This co-pilot is a Reverse Questioner that engages in open-ended questioning with engineers about their initial requirements and the task notes collected by the note-taking co-pilot in the Exploration view. Based on the engineer's answers, the requirement co-pilot iteratively rewrites and expands the AI chain requirements.

Task Decomposition

When given a complex multiplication, such as 67*56, many people may not be able to provide the answer immediately. However, this does not mean that humans lack the ability to perform two-digit multiplication. With pen and paper, it is not too difficult to calculate 60*50+7*50+60*6+7*6 step by step and arrive at the final answer, 3752.
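The pen-and-paper decomposition above can be written out as a short sketch. The function name `multiply_by_parts` is ours and purely illustrative; it splits each factor into tens and ones, computes the four partial products, and sums them.

```python
def multiply_by_parts(a: int, b: int) -> tuple[list[int], int]:
    """Multiply two non-negative integers via tens/ones partial products."""
    a_tens, a_ones = divmod(a, 10)
    b_tens, b_ones = divmod(b, 10)
    partials = [
        (a_tens * 10) * (b_tens * 10),  # 60 * 50
        a_ones * (b_tens * 10),         # 7 * 50
        (a_tens * 10) * b_ones,         # 60 * 6
        a_ones * b_ones,                # 7 * 6
    ]
    return partials, sum(partials)


partials, total = multiply_by_parts(67, 56)
print(partials, total)  # [3000, 350, 360, 42] 3752
```

Each partial product is a sub-task that is easy on its own; only the final sum combines them.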

The same principle applies when working with foundation models. When a model cannot solve a problem successfully in a single generative pass, we can consider how humans would divide and conquer such a complex problem. LLMs demonstrate strong task planning abilities (Ahn et al. 2022, Singh et al. 2022, Huang et al. 2022). During the exploration phase, consulting the LLM can often provide inspiration for task decomposition. Our IDE's Design view is equipped with a Planner co-pilot that generates a sequence of major steps for accomplishing the task requirement, which can be regarded as an initial task decomposition.

We can iteratively break down the problem into smaller, simpler ones. If a sub-task is still too complex, it can be further decomposed into simpler sub-tasks until each small problem can be handled by the model in a single generative pass. This results in a hierarchy of sub-tasks, each of which is accomplished by an AI chain worker (see PCR-Chain and our showcases in AI Chain Marketplace). If the fine-grained and coarse-grained tasks are the same task and differ only in dealing with increasingly simpler inputs (for example, substrings of a long string, or nested code blocks), then we can recursively call the same worker with simpler and simpler input until the model can handle it, similar to least-to-most prompting (Zhou et al. 2022).
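A minimal sketch of the recursive case, under stated assumptions: `stub_model_count_words` stands in for a real model call, and `MAX_CHARS` is a made-up capacity limit for a single generative pass. Neither comes from the source. The same worker is called on shorter and shorter substrings until the stub can handle the input, and the partial results are then combined.

```python
from typing import Callable

MAX_CHARS = 40  # hypothetical limit on what one generative pass can handle


def stub_model_count_words(text: str) -> int:
    """Stand-in for an LLM call; rejects inputs beyond the assumed capacity."""
    if len(text) > MAX_CHARS:
        raise ValueError("input too long for a single pass")
    return len(text.split())


def recursive_worker(
    text: str, leaf: Callable[[str], int] = stub_model_count_words
) -> int:
    """Call the same worker on simpler inputs until the leaf can handle them."""
    if len(text) <= MAX_CHARS:
        return leaf(text)
    # Split at the space closest to the middle so that no word is cut in half.
    mid = len(text) // 2
    cut = text.rfind(" ", 0, mid + 1)
    if cut == -1:
        cut = text.find(" ", mid)
    if cut == -1:
        raise ValueError("cannot split input into smaller pieces")
    # Both halves are strictly shorter than the input, so recursion terminates.
    return recursive_worker(text[:cut], leaf) + recursive_worker(text[cut + 1:], leaf)


long_text = " ".join(f"word{i}" for i in range(50))
print(recursive_worker(long_text))  # 50
```

Word counting is only a placeholder task that is easy to verify; in an actual AI chain the leaf would be a prompt-based worker and the combination step would depend on the task.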

For intermediate sub-tasks, we need composite workers corresponding to the L4 work-AI interaction mode. For leaf sub-task workers, we need to empirically determine the appropriate interaction mode from L1 to L3, which offer increasing reasoning capability. In our showcases, leaf workers are generally L2 workers, as they take a single responsibility and delegate workflow control to explicit Software 1.0 workers in the AI chain.

Task decomposition requires mechanical sympathy to align task characteristics with model capabilities. That is, the appropriate granularity for task decomposition depends on the scale and capabilities of the foundation model. For smaller models with weaker capabilities, tasks may need to be decomposed into finer granularity. As model size and capabilities increase, they are more likely to be competent in coarser-grained tasks.

An important question is whether, as model capabilities scale up, we will no longer need composite workers (i.e., task decomposition and AI chains) and will only need a single worker with sufficient reasoning capabilities. We believe we will see a left-shift in worker-AI interaction modes: a task that previously required a higher-level interaction mode can be completed with a lower-level interaction mode as model capabilities improve, and tasks that previously required a very complex AI chain can be completed with a simpler one. At the same time, many tasks that previous models could not handle can be completed with AI chains and stronger models. However, we do not think AI chain engineering will disappear as model capabilities increase, because it is a fundamental problem-solving and software engineering strategy on top of foundation models, rather than a specific AI capability that could be absorbed by large language models. Stephen Wolfram wrote an insightful article on a related topic, "Will AIs Take All Our Jobs and End Human History - or Not?".

Separation of AI and non-AI Concerns

An AI chain contains many workers, and from both functional and economic perspectives, not all of them need to be implemented with foundation models (TALM, Toolformer, Taskmatrix.AI, HuggingGPT, ChatGPT Plugins). The economic perspective is an important factor because foundation models usually require high-end computing resources or incur significant API usage expenses. We need to distinguish which workers need to leverage AI and which ones can use traditional programs. For AI-based workers, we further distinguish which ones need foundation models and which ones can use traditional ML models.

Through separation of concerns, we can achieve single responsibility and modular design, meaning that each worker in the system has a clear and specific responsibility. This allows engineers to analyze and design different aspects of the AI service without being overwhelmed by its complexity. As a result, we can determine the most effective worker type for each individual sub-task, whether that is a Software 3.0, Software 2.0, or Software 1.0 worker. Because workers are modular and have a single responsibility, a worker's software paradigm can be easily swapped without affecting other parts of the system. For example, we can replace a foundation model-based classifier with a better-performing classifier fine-tuned on task data, or, conversely, replace a task-specific classifier trained on specific data with a foundation model-based classifier that generalizes better.
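The swap described above can be sketched with a shared interface. Everything named here (`SentimentWorker`, `KeywordWorker`, `PromptWorker`, `route_ticket`, `fake_llm`) is hypothetical and chosen only for illustration; `fake_llm` is a stub, not a real model API. The point is that the downstream step depends only on the `classify` signature, so a Software 1.0 worker and a Software 3.0 worker are interchangeable.

```python
from typing import Callable, Protocol


class SentimentWorker(Protocol):
    """Shared interface: any worker paradigm can implement it."""

    def classify(self, text: str) -> str: ...


class KeywordWorker:
    """Software 1.0 worker: a hand-written rule."""

    def classify(self, text: str) -> str:
        return "negative" if "bad" in text.lower() else "positive"


class PromptWorker:
    """Software 3.0 worker: delegates to a foundation model through a prompt."""

    def __init__(self, llm: Callable[[str], str]) -> None:
        self.llm = llm  # assumed to map a prompt string to a completion string

    def classify(self, text: str) -> str:
        prompt = f"Classify the sentiment as positive or negative:\n{text}\nLabel:"
        return self.llm(prompt).strip().lower()


def route_ticket(text: str, worker: SentimentWorker) -> str:
    """Downstream step that does not care which paradigm the worker uses."""
    return "escalate" if worker.classify(text) == "negative" else "archive"


def fake_llm(prompt: str) -> str:
    """Stub standing in for a real model call."""
    return " Negative " if "broken" in prompt else "positive"


print(route_ticket("This is bad", KeywordWorker()))                # escalate
print(route_ticket("The screen is broken", PromptWorker(fake_llm)))  # escalate
```

A Software 2.0 worker (for example, a fine-tuned classifier) would slot in the same way by exposing the same `classify` method.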

For Software 2.0 or Software 1.0 workers, we need to determine the external knowledge base, task-specific models, tools or APIs to be used to implement the workers (see AI chain implementation).

For Software 3.0 workers, the core task is to determine the worker stereotype and design the prompt. Giving each worker a single responsibility makes it easier to determine the worker's distinct role and simplifies its prompt design. Our Sapper IDE is equipped with a prompting co-pilot that creates prompts when generating AI chain steps from the task requirement. Software 3.0 workers can be enhanced by external knowledge bases, tools, or APIs (Liu et al. 2021, Borgeaud et al. 2022, TALM, PAL, Program of Thoughts, Toolformer, Taskmatrix.AI, HuggingGPT, ChatGPT Plugins).

Workflow Walk-through

AI chain workers collaborate to complete tasks according to a workflow (see AI chain examples and our AI chain showcases). This requires algorithmic thinking to develop a step-by-step process for solving problems. We recommend using workflow patterns to model AI chain workflows. Note that an L3 worker expresses the workflow internally in its prompt, which does not support explicit worker cooperation.

The workflow needs to support control structures, such as conditionals, loops, and fork-join. For example, to avoid introducing errors into correct code, PCR-Chain only attempts to fix the code when the LLM determines that there are last-mile errors (judging whether the code has errors is easier than fixing the code).
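The judge-then-fix idea can be sketched as a conditional inside a bounded loop. This is not PCR-Chain's implementation: `repair_if_needed`, `stub_has_error`, and `stub_fix` are hypothetical names, the two stubs stand in for LLM-based judge and fixer workers, and the retry loop with `max_rounds` is our own addition to show a loop alongside the conditional.

```python
from typing import Callable


def repair_if_needed(
    code: str,
    has_error: Callable[[str], bool],
    fix: Callable[[str], str],
    max_rounds: int = 3,
) -> str:
    """Invoke the fixer only when the judge reports an error."""
    for _ in range(max_rounds):      # loop: bounded number of repair rounds
        if not has_error(code):      # conditional: leave correct code untouched
            return code
        code = fix(code)
    return code


def stub_has_error(code: str) -> bool:
    """Stand-in judge: uses Python's own parser instead of an LLM."""
    try:
        compile(code, "<snippet>", "exec")
    except SyntaxError:
        return True
    return False


def stub_fix(code: str) -> str:
    """Stand-in fixer: closes a missing parenthesis."""
    return code + ")"


print(repair_if_needed("print('ok')", stub_has_error, stub_fix))
print(repair_if_needed("print('hi'", stub_has_error, stub_fix))
```

Because the judge runs first, already-correct code never reaches the fixer, which is the property the paragraph above describes.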

Meanwhile, each worker needs to define a function signature in terms of input and output as well as any pre/post-condition constraints, akin to interface specification in software engineering, so that workers can interact or communicate with one another.
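One possible way to express such an interface specification is sketched below. `WorkerContract` and `split_words` are hypothetical names introduced for illustration and are not part of the Sapper IDE; the wrapper checks a precondition on the input and a postcondition on the output around the worker's actual logic.

```python
from dataclasses import dataclass
from typing import Callable, Generic, TypeVar

I = TypeVar("I")
O = TypeVar("O")


@dataclass(frozen=True)
class WorkerContract(Generic[I, O]):
    """A worker's signature plus its pre/post-condition constraints."""

    name: str
    run: Callable[[I], O]                   # the worker's actual logic
    precondition: Callable[[I], bool]       # must hold for the input
    postcondition: Callable[[I, O], bool]   # must hold for input and output

    def __call__(self, value: I) -> O:
        if not self.precondition(value):
            raise ValueError(f"{self.name}: precondition failed")
        result = self.run(value)
        if not self.postcondition(value, result):
            raise ValueError(f"{self.name}: postcondition failed")
        return result


# Example worker: takes non-blank text, returns a non-empty list of words.
split_words: WorkerContract[str, list[str]] = WorkerContract(
    name="split_words",
    run=lambda text: text.lower().split(),
    precondition=lambda text: bool(text.strip()),
    postcondition=lambda text, words: len(words) > 0,
)

print(split_words("Hello World"))  # ['hello', 'world']
```

With contracts made explicit, a downstream worker can rely on the postcondition of its upstream worker as its own precondition, and a violation surfaces at the boundary where it occurs.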